by Jason Rebholz, Chief Information Security Officer, Corvus Insurance

Organizations are finding innumerable ways to incorporate generative artificial intelligence (GenAI) and large language model (LLM) technologies: speeding up processes, gaining efficiencies, eliminating repetitive, low-value tasks and enhancing knowledge, among other uses. And as organizations seek efficiencies in their operations, so do cyber criminals, who want to launch faster, more sophisticated attacks, phishing scams and malware deployments.

The rapid development of GenAI and LLMs has created fear and uncertainty about how cyber criminals can use these technologies in their attacks, now and in the future. While those concerns are currently inflated to unrealistic levels, it is still imperative that organizations have the right security defenses in place to mitigate near-term and future threats.

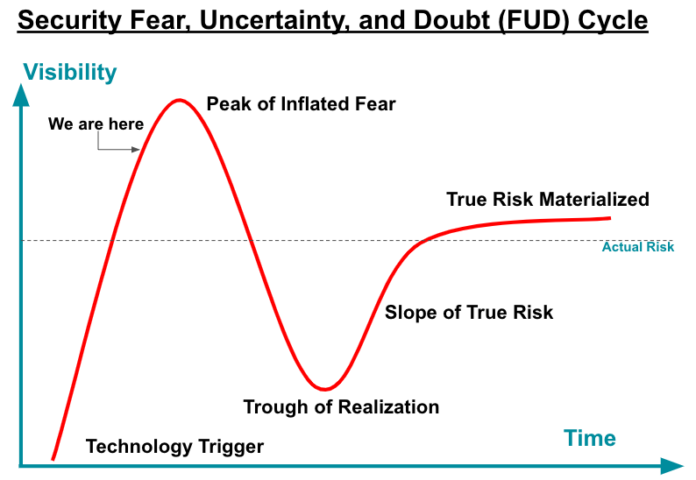

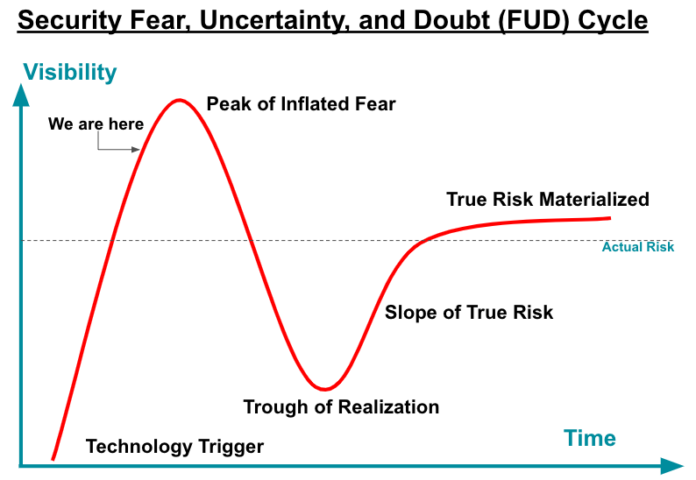

Security and the Fear, Uncertainty and Doubt Cycle

The security industry tends to follow a fear, uncertainty and doubt (FUD) cycle whenever new hacker tooling or capabilities are released. Early fears are often exaggerated because imagination outruns reality, and the actual risk associated with a new discovery often takes time to materialize.

We are near the peak of inflated fear and misinformation about the advancement of GenAI- and LLM-based hacking tools. While no one can predict how quickly the industry (and hackers) will move through this cycle, there is some time before true risk materializes and hackers begin using these tools widely and successfully in their attacks. That window gives defenders time to properly assess the threat and implement the most effective defenses.

Current State of Malicious GenAI Tooling

Near-term applications of GenAI in hacking toolkits will not have a material impact on the frequency and severity of cyber attacks. Despite the hype around them, popular hacking-related GenAI tools like WormGPT, FraudGPT, DarkGPT and DarkBARD have had limited success, and most have already collapsed.

Malicious GenAI tooling is currently limited in both availability and functionality. Existing tools are based on ChatGPT-like technologies and trained on limited datasets. They serve as a basic knowledge repository for hacking techniques and can automate certain micro tasks of an attack:

- Streamline social engineering tactics – Improvements to phishing emails and text messages and the creation of phishing websites

- Basic coding support – Creation of scripts to automate various tasks and the limited creation of generic malware

The barriers to developing and deploying quality GenAI tools are significant and will deter hackers from operationalizing the technology.

- OpenAI has reportedly spent more than $500 million to develop and train ChatGPT.

- Few, if any, hacking groups outside of nation-state actors have the resources to invest in research and development at the scale needed to build a tool as reliable as ChatGPT.

It’s also important to understand what these tools will not do today:

- Revolutionize how attacks happen – Like other GenAI tools, they work by assembling existing information. Because that information describes existing attacks, the tools are limited in their ability to think creatively about new ways to attack.

- Make every human a “super hacker” – The information these tools draw on is already readily available, for example in leaked playbooks that are easy to download. That information has been accessible to everyone and has not turned everyone into a skilled attacker, because applying it requires existing knowledge of how systems and networks operate.

Minimal Impact Near-term

While the advancement of malicious GenAI tools seems rapid, their evolution has been in the works for years. The most notable immediate impact will be on disinformation: supporting the rapid creation and dissemination of fake images, videos and text for disinformation campaigns.

As for cyber attacks, even with current iterations of these tools, the potential for them to make attacks more effective is primarily limited to improving social engineering: phishing emails, text messages and websites.

While it’s fair to expect incremental improvements in these capabilities, current research shows that human-created phishing emails outperformed GenAI-created ones by 45 percent. That gap will likely narrow over time.

In the near term, there is no need to shift how organizations think about preventing cyber attacks. But what about down the road?

The Future – True Risk Materializes

Future GenAI capabilities will drive better attack efficiency for hackers through automation. These capabilities will grow incrementally, over a span of years, because of the expertise and resources required to build and maintain advanced tooling.

When it comes to applying GenAI effectively for malicious purposes, success depends on the ability to fund the research, build the tools and gain access to the data needed to train them. As has happened historically, the pace of attacker adoption will follow the release of open-source tooling by security researchers. Over time, additional financially motivated hacking groups will gain access to this tooling. Security researchers have started to test GenAI in their tools, but there have been no groundbreaking discoveries or use cases to date.

What Should Organizations Do to Mitigate Risk?

GenAI offers threat actors a step forward in attack efficiency, but in the short term, hype and misinformation may be setting off unnecessary alarm bells within organizations. Companies need to stay the course with sound risk mitigation strategies.

- Protect identities – Attackers target user credentials to gain access to environments. Securing user identities with passkeys or other FIDO2 technologies while applying Zero Trust principles to access can help stop attacks before they begin.

- Protect endpoints – Ensure endpoints are monitored and secured with endpoint detection and response (EDR) technologies. These should be fully deployed throughout the environment and monitored 24×7 to quickly respond to and contain attacks.

- Build resilient environments – Assume your existing security controls will fail, and build your environment to withstand an attack. This includes immutable backups, which enable quick recovery, and network segmentation, which limits the blast radius of an attack.

The same security defenses that we have been urging organizations to implement for years are just as effective now as they were before, provided they are deployed fully and correctly. Even if the bad guys come up with GenAI-driven “new tricks” faster, those same defenses will still be effective at preventing attacks.