Kurt Neumann is a Security Architect for Cisco Threat Analytics and the Co-founder of the private cloud solutions company Antsle, Inc. He recently joined Cybersecurity Insiders as a panelist for our webinar “The Importance of Network Traffic Analysis (NTA) for SOCs.” Our discussion touched on the rise in encryption, the new Transport Layer Security protocol (TLS 1.3), and what they mean for cybersecurity. It’s a topic that comes up often, so we invited Kurt back for an interview to explore the issue in more depth.

Key Takeaways

Part 1:

- We are heading toward full-encryption environments, including both defensive and malicious use of encryption

- The new TLS 1.3 protocol will significantly strengthen data security and privacy

- But TLS 1.3 will also take away some tools we’ve relied on for traffic visibility

Part 2:

- There are some workarounds for these tools, and there are new strategies, like network traffic analysis with deep packet inspection (DPI), that can help retain visibility in encrypted environments

- Adapting to expanded encryption and TLS 1.3 won’t be easy for cybersecurity specialists, but it is doable, and adoption needs to start now

Part 1

Holger Schulze, Cybersecurity Insiders (HS): In our recent Network Traffic Analysis survey, an overwhelming 94% of respondents said they considered it “very” or “moderately” urgent to gain greater insight into encrypted network traffic. I believe you said that didn’t surprise you at all. Am I remembering that correctly?

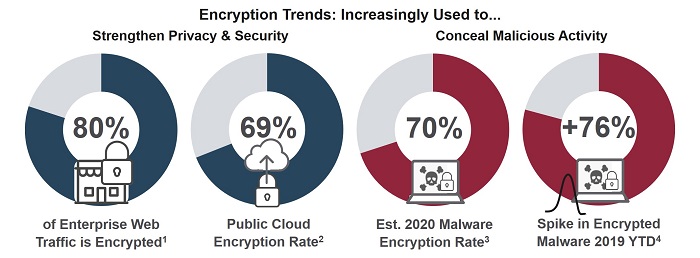

Kurt Neumann (KN): Absolutely. Visibility into encrypted traffic is a problem for everyone. Encryption of enterprise web traffic is expected to hit 80% this year, and the use of encryption to cloak malicious activity is fast approaching the same ratio.

Most people don’t really know exactly what percentage of their global traffic is encrypted, though the ratio of ‘good’ encryption to ‘bad’ encryption is probably about even. But whether the encryption is good or bad, you can’t manage what you can’t see!

And it’s so easy for the bad guys to write or buy malware these days. Do you remember the case of the young Romanian couple who hijacked the Washington, DC surveillance cameras just days before Trump’s inauguration?

Taking over cameras wasn’t their intent; they were just small-time scammers phishing for a ransom. They bought encrypted, plug-and-play malware and an email list off the dark web and turned them loose. Unfortunately, a DC police employee happened to be on the list and took the bait, setting off this mad Secret Service scramble in the world’s most powerful nation.

Obviously, there’s no shortage of similar high-profile encrypted attacks by both amateurs and pros. So, it’s not surprising that enterprises want more visibility over what’s happening on their networks. It’s not the way anyone wants to wind up in the headlines.

And of course there are many non-security needs that require visibility too – troubleshooting, performance management, priority traffic handling. But when it comes to visibility, encryption is a real barrier.

HS: But right now companies can get around the encryption blind spot using an SSL inspection proxy server, right?

KN: Yes, but many don’t. There are a lot of reasons for this: resource issues, performance or privacy concerns, regulatory constraints… But there are a lot of large organizations that are using decryption.

They’ve deployed TLS intercept applications [TIAs] that use proxy servers to offload copies of encrypted data for decryption and analysis, or inline man-in-the-middle-style solutions to decrypt, inspect and re-encrypt streaming traffic. There are two problems with this going forward, though.

First, regulations like GDPR in Europe and CCPA in California restrict routine decryption: it has to be very specific and strictly justified. Second, the new TLS encryption standard – TLS 1.3 – is going to knock out the traditional out-of-band proxy decryption many are using.

HS: How so?

KN: With conventional out-of-band proxy servers and TLS 1.2 sessions that used RSA key exchange, knowing the server’s private key was all you needed to decrypt the session and the payload – any time you wanted. With TLS 1.3, that’s no longer possible.

Encryption is enforced from source to termination, certificates are encrypted, and the session keys are ephemeral: they’re generated for each session and then discarded. There’s no static key to hang on to and use as the key to the kingdom whenever you want.
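To see why a stored private key stops helping, here is a deliberately toy Python sketch of the ephemeral Diffie-Hellman exchange that TLS 1.3 requires. Under TLS 1.2 RSA key exchange, the client encrypted the premaster secret to the server’s long-term key, so holding that key was enough; here, each side picks a throwaway secret per session. Assumptions: the 64-bit prime, the base `G = 2`, the function names, and the SHA-256 stand-in for TLS 1.3’s HKDF key schedule are all illustrative; real TLS 1.3 uses named groups such as X25519 or ffdhe2048.

```python
import hashlib
import secrets

# TOY parameters for illustration only: 2**64 - 59 is prime but far too small
# to be secure. Real TLS 1.3 uses named groups such as X25519 or ffdhe2048.
P = 2**64 - 59
G = 2


def ephemeral_keypair() -> tuple[int, int]:
    """Generate a fresh (private, public) pair for a single session."""
    private = secrets.randbelow(P - 3) + 2  # random exponent in [2, P - 2]
    return private, pow(G, private, P)


def session_key(shared_secret: int) -> str:
    # Hypothetical stand-in for the TLS 1.3 HKDF-based key schedule.
    return hashlib.sha256(shared_secret.to_bytes(8, "big")).hexdigest()


def handshake() -> tuple[str, str, tuple[int, int]]:
    """Run one toy exchange; return both sides' keys and what a sniffer sees."""
    client_priv, client_pub = ephemeral_keypair()
    server_priv, server_pub = ephemeral_keypair()
    client_key = session_key(pow(server_pub, client_priv, P))
    server_key = session_key(pow(client_pub, server_priv, P))
    # client_priv and server_priv are discarded when this function returns:
    # no long-lived key exists that could decrypt this session later.
    return client_key, server_key, (client_pub, server_pub)


if __name__ == "__main__":
    c_key, s_key, on_the_wire = handshake()
    print("keys match:", c_key == s_key)
    print("visible to a passive observer:", on_the_wire)
```

Each call to `handshake()` derives a new key. In real TLS 1.3 the server’s certificate key (not modeled here) only signs the handshake, so an out-of-band decryptor holding it has nothing to decrypt with.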

However, if you’re using a full man-in-the-middle style proxy on-premises or in the cloud, meaning you appear as the termination, and you’re using decryption within relevant regulatory boundaries, you’ll be fine – mostly.

I say ‘mostly’ because you, or your security solution provider, are going to have to address some performance issues associated with TLS 1.3’s stronger encryption, even though 1.3 streamlines and speeds up the handshake. You’re also going to have to tackle some complex technical issues associated with the revised architecture. For some, this will all be manageable; for others, it may not be so easy.

HS: And if you’re using out-of-band decryption, what then?

KN: If you’re using a conventional out-of-band decryption solution, you’re generally out of luck. And if you’re relying on metadata from basic network monitoring only for visibility – no decryption – you’re definitely out of luck.

Because TLS 1.3 encrypts certificates and other session data, regular sniffers aren’t going to have much to pick up. And if DNS lookups travel over HTTPS (DNS over HTTPS, or DoH), you’re not going to get anything. No DNS. No URLs. No anything.

[Source: CC BY-SA 2.0, Robert Bjoerken]

HS: For out-of-band decryption, you said “generally” out of luck. Are there workarounds?

KN: Well, you could shift the burden to endpoints. Antivirus software has evolved a lot, and endpoint features are really important; the endpoint is maybe the strongest tool – for now. So, in theory, you could put some kind of sandboxing and deep packet inspection there.

But it’s really expensive to do endpoint defense, assuming you can even get a handle on all your devices. Think how heavy some endpoint systems are now, and what they’ll be like if you load them up with even more resource-consuming tools.

But let’s just say you do have control over all the endpoints in your network. As an alternative to AV, you could share endpoint session data with your TIA so it can decrypt the traffic, or maybe you could access a key log (debugging) file to get the session keys you need. Neither is perfect, and both would require additional security safeguards for the data transfers.
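A key log file is typically written in the NSS key log format, which many TLS libraries can produce on request, often via the `SSLKEYLOGFILE` environment variable. As a minimal sketch, assuming the endpoint application is Python 3.8+ built against OpenSSL 1.1.1 or later, the standard `ssl` module exposes this as `SSLContext.keylog_filename`; the function name and file location below are hypothetical. Traffic analyzers that accept such a file (Wireshark, for example) can then decrypt captured sessions, which is exactly why the file needs the extra safeguards described above.

```python
import os
import ssl
import tempfile


def client_context_with_keylog(keylog_path: str) -> ssl.SSLContext:
    """Return a client TLS context that appends session secrets to keylog_path."""
    ctx = ssl.create_default_context()
    # Secrets are written in the NSS key log format, one line per secret,
    # whenever key material is generated during a handshake. Anyone who can
    # read this file can decrypt the recorded sessions, so protect it.
    ctx.keylog_filename = keylog_path
    return ctx


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "tls-keys.log")  # hypothetical location
    ctx = client_context_with_keylog(path)
    print("logging TLS secrets to", ctx.keylog_filename)
    # Any connection made with ctx (for example via ctx.wrap_socket or
    # urllib.request.urlopen(url, context=ctx)) would now log its secrets.
```

Note that `ssl.create_default_context()` already honors `SSLKEYLOGFILE` when that variable is set, so setting the attribute explicitly mainly matters when you want a fixed, managed location.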

However, I don’t know anyone who has full legal and technical control over all their endpoints, so I don’t see a universal solution. You could cover certain assets and cloud or on-premises segments with workarounds, but you can’t avoid the additional complexity.

For instance, even if you’re using an inline tool that doesn’t intercept TLS, you still need some mechanism to get the standard X.509 certificate data. That data is what you use to exclude certain types of sessions from decryption for regulatory reasons, or to otherwise whitelist or blacklist sessions so you can manage the workload without everything grinding to a halt.

Without such a mechanism, you’ll have to establish a full TLS session to extract the certificate details and then classify the session, which obviously defeats the purpose. At that point, you’re decrypting everything, which is awful for privacy, compliance and performance, so it’s essential to categorize the traffic without establishing a full session.
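The categorization step can be sketched as a simple policy lookup that runs before any decryption. This Python example is hypothetical throughout: it assumes some mechanism has already supplied the certificate’s subject and DNS names, and the domain lists and `Action` categories stand in for whatever a real categorization feed and compliance policy would provide.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    BYPASS = "bypass"    # never decrypt (e.g. regulated categories)
    BLOCK = "block"      # drop the session outright
    INSPECT = "inspect"  # hand off for decryption and inspection


@dataclass
class CertInfo:
    """Hypothetical subset of X.509 fields relevant to the policy decision."""
    subject_cn: str
    san_dns: list[str] = field(default_factory=list)


# Hypothetical policy lists; real ones would come from a categorization
# feed and from legal/compliance review.
BYPASS_SUFFIXES = (".examplebank.com", ".examplehealth.org")
BLOCK_SUFFIXES = (".malware-example.net",)


def _matches(name: str, suffixes: tuple[str, ...]) -> bool:
    """True if name equals a listed domain or is a subdomain of one."""
    name = name.lower().rstrip(".")
    return any(name == s.lstrip(".") or name.endswith(s) for s in suffixes)


def classify(cert: CertInfo) -> Action:
    names = [cert.subject_cn, *cert.san_dns]
    # Blocking wins over bypass, and anything unlisted is inspected.
    if any(_matches(n, BLOCK_SUFFIXES) for n in names):
        return Action.BLOCK
    if any(_matches(n, BYPASS_SUFFIXES) for n in names):
        return Action.BYPASS
    return Action.INSPECT
```

For example, `classify(CertInfo("www.examplebank.com"))` returns `Action.BYPASS`, while an unlisted `CertInfo("cdn.example.com")` returns `Action.INSPECT`. Checking the block list first is a design choice: a certificate that names both a regulated and a known-bad domain is dropped rather than waved through.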

One other out-of-band option would be to force a downgrade from TLS 1.3 to TLS 1.2, which is what some are doing now. But there are security issues with that, and the window for doing so will close as 1.2 is deprecated.
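Where the organization controls the TLS endpoint (for example, the listener of its own proxy), the downgrade amounts to a version cap. As a minimal sketch, assuming Python’s standard `ssl` module (the function name is hypothetical), it looks like this. One caveat: capping at TLS 1.2 does not by itself bring back static-key decryption, because a TLS 1.2 session that negotiates an ECDHE cipher suite also uses ephemeral keys; only RSA key exchange suites can be decrypted later with the server’s private key.

```python
import ssl


def tls12_only_server_context() -> ssl.SSLContext:
    """Server-side context that refuses to negotiate TLS 1.3 (sketch only)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Pin both bounds to TLS 1.2: clients offering 1.3 fall back to 1.2,
    # and clients that require 1.3 fail the handshake.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2
    # A real listener would also call ctx.load_cert_chain(certfile, keyfile).
    return ctx
```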

In Part 2 of this interview, we will discuss the pace of TLS 1.3 adoption and how DPI-based Network Traffic Analysis can deliver visibility into encrypted traffic without decrypting it.

- Gartner, Inc.

- Ibid., Gartner.

- SonicWall, Inc.

- Ibid., SonicWall.